Are you a Quiet Speculation member?

If not, now is a perfect time to join up! Our powerful tools, breaking-news analysis, and exclusive Discord channel will make sure you stay up to date and ahead of the curve.

Nobody enjoys performance reviews. Even if they're positive. There's just something existentially distressing that comes with being assessed, aside from any professional implications stemming from said review. And they're also frequently boring for everyone involved. (From personal experience.) However, they can be useful for fostering improvement. Especially when they're self-administered in a public setting, so there's no weaselling out of self-criticism.

It's been long enough since the release of Modern Horizons 2 for the set to be reasonably explored and integrated into the metagame. There are always cards that get overlooked for years or need help to make the grade, but it looks like things are settling down. Relatively speaking, anyway. Therefore, this is an opportunity to revisit my periodic reflections on how spoiler predictions have played out. However, this time rather than trying to explain or excuse misses, I'm looking for the lessons from MH2 spoiler season. What did we miss, where did we miss, and why did we miss it? I'll also point out what we got right, because criticism is easier to take when praise is mixed in.

The Top 5

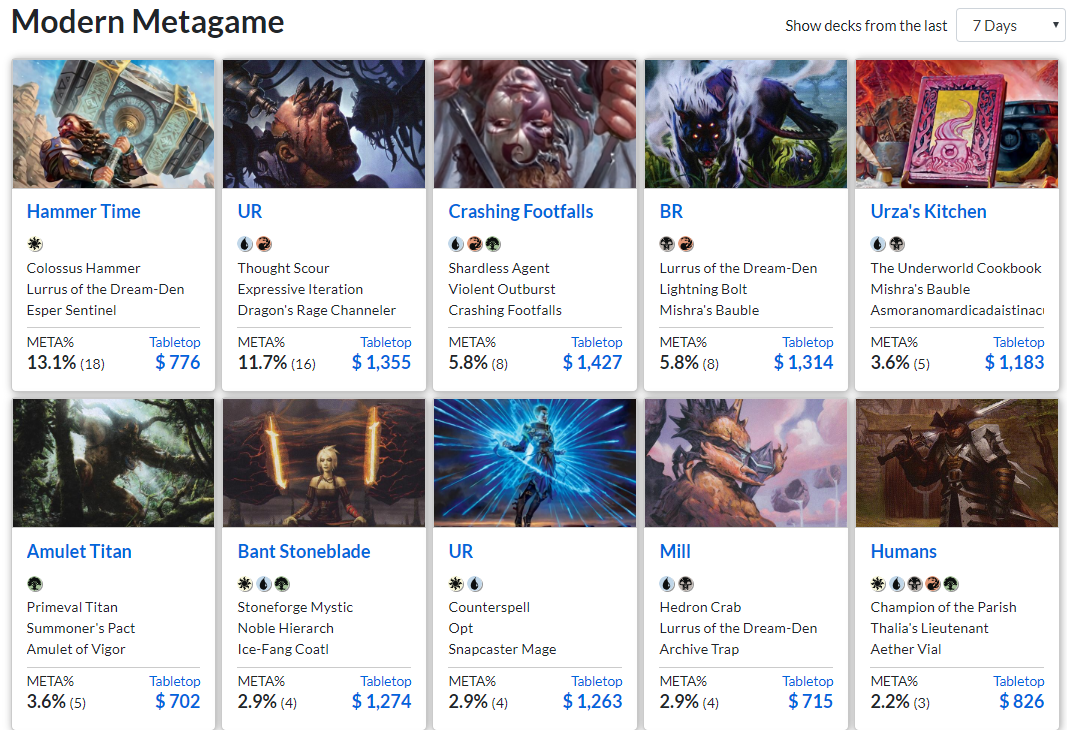

The hardest part of evaluations is figuring out where to start. It's also quite hard to decide how to evaluate something, and setting criteria tends to eat up a lot of time. So I've decided to start as objectively as possible, working comparatively from a list. Jordan capped off the spoiler season with a Top 5 list, so it's fairly easy to compare that list to the most played cards list on MTGGoldfish (as of Monday, 8/16) and see how he did.

| Place | Jordan's Rank | MTGGoldfish Rank |

|---|---|---|

| 1 | Ragavan, Nimble Pilferer | Prismatic Ending |

| 2 | Abundant Harvest | Endurance |

| 3 | Prismatic Ending | Ragavan, Nimble Pilferer |

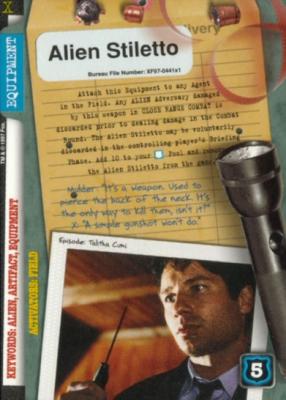

| 4 | Urza's Saga | Unholy Heat |

| 5 | Sudden Edict | Dragon's Rage Channeler |

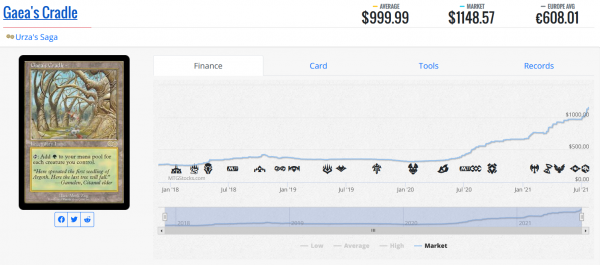

Well, that's quite some variation, but Jordan did get 2/5, which isn't bad considering that these were evaluated without being tested in any tournament setting. That the two Jordan got right are #1 and #3 is significant, even if they are in reverse order. That said, two out of five is not a passing grade, so shame on Jordan, right? Wrong. I'll admit, Sudden Edict is a huge whiff; it has seen play in exactly one deck since release. And everyone was high on Urza's Saga, yet it just hasn't worked out. It doesn't even make MTGGoldfish's Top 50 list. Why? I'd say the complete lack of splashability Jordan identified is the key. Saga is quite powerful, but accessing that power requires a commitment to artifacts that few decks can muster.

Abundant Harvest hasn't really panned out either, but that doesn't make Jordan wrong. Harvest has seen plenty of play since release. The catch is that it's been in a deck that has severely fallen off. Couple that with UR taking all the space for cantrips in the metagame, and there's no place for Harvest. None of that means Jordan's rating was wrong about what the card is capable of or its power in a vacuum. Things just didn't work out the way we expected.

It's Elementary

Another easy measurement should be the Incarnations list. There were five elemental incarnations and they had obvious power differences, which lent themselves well to ranking. And we weren't the only ones. StarCityGames recently did their Exit Interview for MH2 and ranked the incarnations based on how they've actually played. Averaging their scores yields a consensus place for each. Should be simple to just compare Jordan's list (which I agreed with at the time) to the SCG consensus and see how we did, right? Well, that's one way. However, it's also fair to ask how SCG's commentators did based on their initial impressions. But again, there's the MTGGoldfish ranking based on actual play frequency. Which is the most accurate? How about I sidestep that question and compare our list to all the options?

| Place | Jordan's Ranking | SCG Initial Ranking | SCG Current Ranking | MTGGoldfish Ranking |
|---|---|---|---|---|
| 1 | Solitude | Solitude | Solitude | Endurance |
| 2 | Subtlety | Grief | Fury | Fury |
| 3 | Endurance | Subtlety | Endurance | Solitude |
| 4 | Fury | Fury | Grief | Subtlety |
| 5 | Grief | Endurance | Subtlety | Grief |

Jordan and SCG are in lockstep over Solitude as the most powerful. Swords to Plowshares is incredibly strong, and having it as a pitch spell is invaluable. Jordan and SCG's current ranking also agree that Endurance is the third strongest, and SCG's initial list matched Jordan's on Fury at fourth. Beyond that, the only point of agreement is with MTGGoldfish that Grief is the worst incarnation. Even the SCG guys were down on Grief compared to their initial impressions, and many admitted to rating it more on the basis of objective power than actual performance. It turns out that perspective and methodology really affect the evaluative process.
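To make the place-by-place comparison concrete, here is a small Python sketch that transcribes the four rankings from the table and lists, for each other source, the places where it matches Jordan's list exactly. The dictionary and function names are illustrative only; nothing here comes from SCG or MTGGoldfish. It checks exact positional matches and nothing else, so it says nothing about how far apart the lists are at the other places.

```python
# Rankings transcribed from the incarnation table (place 1 first).
RANKINGS = {
    "Jordan": ["Solitude", "Subtlety", "Endurance", "Fury", "Grief"],
    "SCG initial": ["Solitude", "Grief", "Subtlety", "Fury", "Endurance"],
    "SCG current": ["Solitude", "Fury", "Endurance", "Grief", "Subtlety"],
    "MTGGoldfish": ["Endurance", "Fury", "Solitude", "Subtlety", "Grief"],
}


def agreements(base: str, rankings: dict[str, list[str]]) -> dict[str, list[tuple[int, str]]]:
    """For every list other than `base`, return the (place, card) pairs
    where that list puts the same card at the same place as `base`."""
    reference = rankings[base]
    result = {}
    for name, order in rankings.items():
        if name == base:
            continue  # don't compare the base list with itself
        result[name] = [
            (place, card)
            for place, (card, other) in enumerate(zip(reference, order), start=1)
            if card == other
        ]
    return result


if __name__ == "__main__":
    for name, matches in agreements("Jordan", RANKINGS).items():
        print(f"{name}: {matches}")
```

Run as written, it shows SCG's initial list matching Jordan on Solitude at first and Fury at fourth, SCG's current list matching on Solitude and Endurance, and MTGGoldfish matching only on Grief at fifth.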

What it Means

The point here is that trying to measure spoiler season success objectively is quite hard, if not impossible. Everything depends on how the question is asked and what criteria are used to evaluate it. It's especially unfair since, all jokes aside, we're not clairvoyant. There's no way to know how things will actually turn out without practical experience. So there are no objective criteria for success in these things.

However, that doesn't mean that we can't still learn and evaluate our performance. It just won't be in a nice list. This is about looking at what we actually wrote about the cards and whether we did a good job evaluating their potential. Again, did we totally miss on any played cards? What did we overestimate, and why? And what were we on the money about?

What Went Wrong

As I see things, it doesn't much matter whether we got a card exactly right. What matters is whether we evaluated it correctly. We can't know how anything will play out until the set's released. A card's final value is not its inherent power but its contextual power once it finds a home, and we can only guess at that. Who saw Shardless Agent making Crashing Footfalls into a Tier 1 card? So as long as we said the right things about a card, I'm going to count it as a pass. Reading the future is hard, okay? Assess us on the things we could control. Thus, after going through everything that we both said about MH2, here's my evaluation of our performance.

Did We Miss Something Playable?

The biggest question is whether anything was overlooked. That's easy to determine and would also be the biggest mistake we could make. And on that front I have good news. According to MTGGoldfish, the only commonly played MH2 card that we didn't talk about at all is Foundation Breaker. It turns out that Living End really likes having an evoke Naturalize to fight through hate cards. And I think we missed it thanks to fatigue. Every time there have been cycling creatures or creatures with sacrifice effects, we've said something to the effect of "it may find a home in Living End." And we're often right. However, having done this for so many years, it just slipped our minds. We'd said it so often that it started losing meaning to us, and we overlooked a key card in a popular deck.

Missing a role player in what was at the time a newly resurgent deck is not that big a deal. We did at least identify all the potentially playable cards from MH2 and were in the ballpark about how and why they'd see play. Overall, a good performance.

What Was Underestimated and Why?

We underestimated Dragon's Rage Channeler and, to a lesser extent, Unholy Heat and Murktide Regent. The problem on our end was experience. Years ago, Jordan had tried to make Delirium Zoo a thing. He found that Gnarlwood Dryad was great with delirium and terrible without, and he had to contort his deck to make Dryad work. DRC looks a lot like Dryad stat-wise, so Jordan's experience said that DRC would only be great sometimes, which cooled our expectations. What we missed was how powerful repeatable surveil would prove in a UR shell, and that it would synergize so well with Heat and Regent that UR Thresh would supplant UR Prowess. Jordan was right about what these cards would do and how they'd play, but they're all far more playable than expected. This is a case of experience leaving us gun-shy.

Meanwhile, I was too cool on Prismatic Ending in retrospect. Jordan was totally right about how splashable it's proven to be. I thought that it would see a lot of play (and it has) but only in control decks. Instead, the opportunity cost is so low and the flexibility so high that it sees play in basically every multicolor deck with white in it.

What Was Overestimated and Why?

I was too hot on Rishadan Dockhand in my initial review. Dockhand really hasn't panned out in Modern. A lot of that comes down to how the format has shaken out, but I also gushed too early. Tide Shaper was spoiled after I wrote that article and did what Merfolk needed much better. There was no way I could have seen that coming, and nothing made me expect that Dockhand would be superseded. And I did walk my assessment back a few weeks later.

Abundant Harvest has seen less play than expected, but I've covered it and Sudden Edict already. However, we did think that all the anti-Tron cards would see more play. That hasn't happened, though whether that's because the cards are worse than expected or because of metagame considerations isn't clear. Tron fell off massively after MH2's release, and that may have nothing to do with those cards. Thus this may be a case of waiting for the right metagame rather than an overestimated power level. On a similar note, Domain Zoo hasn't lasted. It did well initially, but has fallen away as Living End and UR Thresh have risen. The cards did what we expected in the deck, but the deck itself didn't work out. Or maybe just not yet.

The Big Picture

Overall, I'm happy with how our predictions shook out. We didn't get everything exactly right, but most of that is down to how the metagame has evolved, which is out of our control. When we made mistakes, it was a combination of jumping the gun and relying too much on history to make judgement calls. Experience informs how a card will play, but it isn't deterministic. Let the cards speak for themselves rather than be spoken for by previous cards.

What Went Right

I feel as though our greatest strength this spoiler season was card evaluation. We were very good at assessing where cards fit into Modern. We didn't always get their contextual power right, nor could we know whether their decks would succeed, but we succeeded at evaluating each card's role.

For example, I said that Counterspell would largely replace the cheap counters but leave the expensive ones alone, and that's what's happened. I've also been proven right about the problems I identified with Grief, despite players desperately trying to make it work anyway. Jordan's evaluation of Urza's Saga and Prismatic Ending was right on the money (despite their places on his list). He also called out Fire // Ice and Suspend as playable cards when I didn't think either would see any play. We're good at understanding the cards. The metagame's the issue.

Lessons for Next Time

My lessons from MH2 spoilers are as follows:

- Focus on the cards themselves. The metagame is certain to shift and decks will fall and rise. We can't predict that, so focus on our strength and evaluate the cards.

- Focus on the cards in the current context. Circumstances and metagames change. Experience isn't always predictive, so don't let past experience dictate everything.

- Remember that everything is relative. We don't know what we don't know. Don't sweat getting it exactly right. Instead, if you're retrospectively wrong, be wrong for the right reasons.

Sooner Than Later

And this is timely because the teasers for the Innistrad split set are already starting. I get wanting to do multiple themes and having parallel stories, but splitting the fall set in two is a bit extreme. I guess we'll just have to see how this plays out. While wondering why they couldn't just do this as an old-school block.

When I bet on Modern, I'm betting on it being the most popular format on MTGO. And only on MTGO. The simple fact is that MTGO is the only way to measure Modern's popularity relative to other formats. Arena only has Standard and Historic, so it can't answer the wider question of which format is most popular when players are given a choice. Also, Arena's playerbase will be quite different from MTGO's thanks to Arena being free-to-play. (And yet somehow more expensive to build decks on.) It's critical to compare like to like, and by excluding Arena, I don't risk comparing very different population compositions. Saying that Standard is the most popular format because it's the main option on the most played digital Magic product, when it's one of only two options and cheaper than Historic, really isn't fair to Standard or to other formats.

I'm also not going to compare the constructed formats to limited. It really isn't a fair comparison. In my experience, most competitive players will say their favorite format is booster draft, with a caveat. In said experience, players tend to extol booster draft, the concept, as both the most skill-intensive and the most fun format. Winning requires format knowledge, deck-building skill, play skill, and luck more than in other formats, because you're building your deck as you go. However, the more this general opinion is investigated, the more exceptions, exclusions, and complications arise. Certain sets are more popular to draft than others. Draft participation also swings wildly from a set's introduction to its rotation. Thus, the exact timing of a study would wildly affect the limited data, and it makes sense to cut it.

This study is limited to data from MTGO. Sadly, there aren't enough paper results to draw any real conclusions. The few events that are out there don't really compare well to each other, and they're not distributed evenly. Pre-pandemic, this whole project would have been easy since there were plenty of events of all levels in all formats. Plus, these events usually published their attendance numbers, so comparing those would have fairly definitively answered the question. That isn't possible now.

The various social media pages that kept track of weekly events have not been updated, and a number of stores went bust while others opened during the pandemic. Finding all the stores running in-person events and asking them about attendance is far more work than I'm able and willing to do on my own. (With the right funding, on the other hand....)

Thus my study will include only MTGO results. But not all the constructed MTGO results. After some investigation, I decided to exclude both Standard and Vintage from the study. The only data I can collect requires tournament support, and the specialty and online-only formats don't have that, so they're out. Standard was cut because far more of it is played on Arena than on MTGO. Standard streamers basically always using Arena was strong evidence, as were Wizards and Star City Games running their Standard tournaments on Arena. So I assumed that the MTGO data would be lacking (and it was; I checked).

With Vintage, it was purely a judgement call. Vintage has accessibility issues which keep newer players out. The cost of Vintage cards is so high that the format really can't be played in paper. Thus, Vintage players have mostly moved online. However, the lack of overlap between Vintage decks and other formats means that it's hard to transition to Vintage naturally. There's more overlap between Standard and Modern cards than between Legacy and Vintage, for example. It's an enthusiast's format, and I was told by several sources that the community is quite static. While the old-timer would be a reasonable point of comparison for the other, more variable formats, I decided that the extra effort wasn't worthwhile just to learn that Vintage is a fairly insular and stable group compared to other formats.

I am going to measure the relative popularity of the tournament-supported constructed formats on MTGO. These are Pioneer, Modern, Pauper, and Legacy. To accomplish this, I gathered three sources of data. The first is each format's Tournament Practice room. Last Sunday afternoon, I watched each room for 10 minutes apiece, recording each time a match fired. This source indicates the relative number of players in each format by measuring how quickly a match could be found. More matches means more players means more popular.

The answer is Modern. By quite a large margin. And I'm fairly certain that I missed a few Modern matches, as at several points multiple players were creating and then closing matches to join someone else's. So the real blowout is likely even bigger. The Pioneer room was practically dead, save for some kind of non-Wizards tournament whose players were starting their matches there. Pauper and Legacy were quite comparable, which was surprising. I'd been told Pauper was in a very bad place and wasn't seeing play, but that seems to have been inaccurate.

The second source is how many Preliminaries fired each week. The sample included each complete week in July and the first week of August. I'll then compare the average Preliminaries per week to the theoretical maximum to find the most popular Preliminary format.

That's another point in Modern's column. It not only had the highest average in absolute terms, with 4.8, but also the highest percentage. Both Modern and Pioneer had 8 Preliminaries scheduled, so 4.8/8 = 60% of Modern Preliminaries fired versus 1.8/8 = 22.5% for Pioneer. Legacy recorded 2.8/6 = 46.7% for a strong second place.
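The fire-rate comparison is simple arithmetic: average Preliminaries fired per week divided by the number scheduled. Here is a minimal Python sketch using only the figures quoted above, assuming the scheduled counts are per-week numbers. Pauper is left out because no Preliminary figures are given for it, and the names `PRELIMS` and `fire_rate` are illustrative.

```python
# (average Preliminaries fired per week, Preliminaries scheduled per week),
# as quoted in the text. Pauper is omitted: no Preliminary figures are given.
PRELIMS = {
    "Modern": (4.8, 8),
    "Pioneer": (1.8, 8),
    "Legacy": (2.8, 6),
}


def fire_rate(avg_fired: float, scheduled: int) -> float:
    """Percentage of scheduled Preliminaries that actually fired."""
    return 100 * avg_fired / scheduled


if __name__ == "__main__":
    # Sort formats from highest to lowest fire rate.
    ranked = sorted(PRELIMS.items(), key=lambda kv: fire_rate(*kv[1]), reverse=True)
    for fmt, (fired, scheduled) in ranked:
        print(f"{fmt}: {fired}/{scheduled} = {fire_rate(fired, scheduled):.1f}%")
```

Dividing by the scheduled count matters here: Legacy fires fewer Preliminaries than Modern in absolute terms but has fewer slots, so its percentage lands well above Pioneer's even though both trail Modern.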

Modern has the largest Challenges, followed by Legacy. Pauper has the smallest, with 150 points separating it from Modern. It's clearly not a highly played format right now. Though I'd argue Pauper is actually the best value for entry. It only took 6 points to make Top 32 of several of these events, which is two match wins. That's not a very big hill to climb to get prizes. I thought Legacy would be higher since the Legacy crowd tends to be vocal, but the stats don't lie: it's just above Pioneer and well below Modern.

It's extremely good in Hammer Time because that deck only needs a few lands, and Saga fills a number of holes in the deck. Older versions struggled with running out of threats in general but particularly suffered when they didn't hit Stoneforge Mystic to find their Hammers. Saga makes threats and then Mystics for a Hammer. Or another bullet if necessary. Being good in a specific deck doesn't make a card a pillar.

Remember a  that consistently just squeaks into Top 32 the same weight as one that Top 8’s. Using a power ranking rewards good results and moves the winningest decks to the top of the pile and better reflects its metagame potential.

strength vs. popularity. Measuring deck strength is hard. There is no

As previously mentioned, Heliod Company didn't show up very often. But when it does, it does very well, and it took the top slot, indicating that it was quite underplayed in July. Thresh and Hammer Time appearing in the middle of the pack is actually fairly worrying for them, as it again suggests that they were more popular than actually good. However, they're far enough above baseline to say that their population is justified.

therefore constitute overplaying. A tempo deck using Remand is appropriate since all it wants is to delay the opponent. Lots of

Does the card stand on its own or require help? A card that is good by itself in a wide variety of contexts is no parasite. However, if a card absolutely needs others to be playable, let alone good, then it is one. This isn't a problem by itself, as tribal cards are inherently parasitic and there's no issue with their playability. The problem comes when a card is a parasite but the parasitism isn't obvious. Champion of the Parish is highly parasitic, but nobody would ever run it without support, meaning it will only see play in the right context. Thus, it wouldn't meet my definition of overplayed.

Conversely, Chalice of the Void doesn't need much support from its own deck to do its thing. However, it's only meaningful in the context of the opponent's deck, which is still parasitic in the

than the alternative? No card is ever actually a free include. There's always an alternative that could be played, and therefore there is always an opportunity cost to every card. However, a card with a low opportunity cost will either have few alternatives or be significantly better than said alternatives. For a high one, the opposite is true. To wit: the opportunity cost of Lightning Bolt is its alternative, Lightning Strike (same effect, different cost). As Bolt is cheaper, that opportunity cost is very low. However, in the context of removal spells in general, Bolt's opportunity cost may be quite high, as there is a wide range of one-mana kill spells, and depending on the metagame, it may prove expensive to include Bolt over something else.

Force of Negation is a good card, and at times it has been a necessary one. It's the most flexible free counterspell in Modern. Disrupting Shoal never saw much play because it's hard to use. So Force is the only means most decks have of protecting themselves against opponents on the turn they tap out. This is very important against control and combo decks. The problem is that Force is only actually free on the opponent's turn. Even then, you only want to counter really important spells, and only if absolutely necessary. There's a reason that Force of Will gets cut in fair matchups in Legacy.

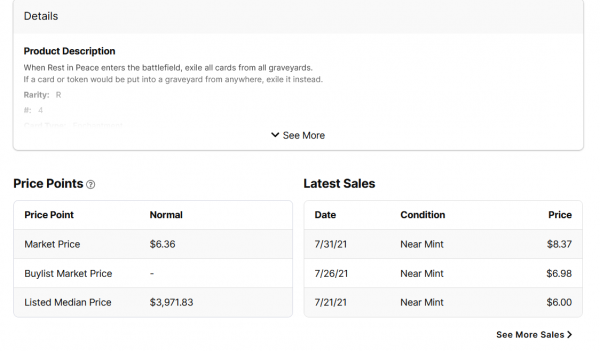

Force of Negation is a good card, and at times it has been a necessary card. It's the most flexible free counterspell in Modern. Disrupting Shoal never saw much play because it's hard to use. So Force is the only means most decks have of protecting themselves against opponents on the turn they tap out. This is very important against control and combo decks. The problem is that Force is only actually free on the opponent's turn. Even then, you only want to counter really important spells, and only if absolutely necessary. There's a reason that Force of Will gets cut in fair matchups in Legacy.

I like Sanctifier en-Vec. However, players have taken to playing it in place of Rest in Peace in decks that formerly ran Rest. The thinking is that the cards most decks want to exile are all red, because they're thinking of Dragon's Rage Channeler decks. A creature with protection from red is really good against those decks, so Sanctifier does the job of both Rest and Auriok Champion, freeing up sideboard space. The catch is that Sanctifier doesn't actually answer Dragon's Rage Channeler, because it can't exile artifacts, lands, or blue cards. And that's not even considering white and green decks.

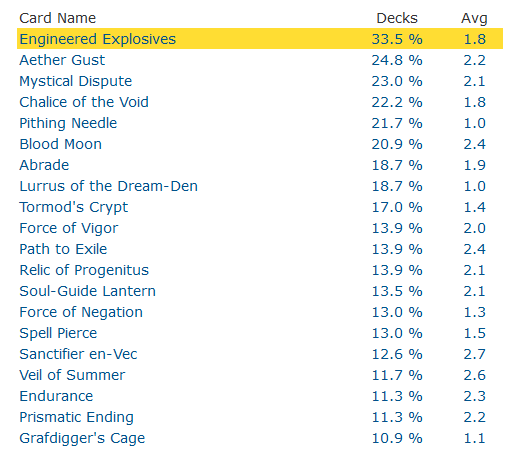

The most hyped red one-drop from MH2 was Ragavan, Nimble Pilferer. The most played one has proved to be DRC. It may be this hype, and the dream of stealing opposing cards, that has led many players to run Ragavan in decks that cannot support him. And Ragavan needs a lot of support.

What got me thinking about overplayed cards in the first place was watching a UW Control deck Aether Gust a Primeval Titan three times, then die once the Titan finally resolved. Gust is a tempo card that somehow mostly sees play in non-tempo decks, primarily control decks, which want to remove threats permanently rather than delay them only to answer them again. Were Gust seeing play in UR, there'd be no problem. But it's the heavy play in control decks that earns Gust its spot.